In the first four fulldome shows that Morehead produced, we didn’t have to do much collaboration with outside groups. We’d sometimes contract out a writer or the composer, but for the most part, our productions were created almost completely in-house. That all changed when we met with Donovan Zimmerman from Paperhand Puppet Intervention and decided to collaborate on our newest show in production, The Longest Night. Paperhand had been putting on stage performances in North Carolina for over 10 years and we loved their style, aesthetic and message. But collaborating with a completely non-digital group presented some challenges.

The basic plan was for Paperhand to write the script; we’d then take it and produce storyboards and pre-viz. After that we’d shoot live action of Paperhand’s giant puppets on green screen and do the digital production, and finally Paperhand would score the show. Every step of the way we’d make sure to COLLABORATE, meaning that we weren’t just dividing up the tasks: we were bouncing ideas off each other and making sure we were both happy with the result. Easier said than done.

The first challenge was that Paperhand had never been involved with a dome production. In fact, they’d never been involved with a film production of any of their shows. So we were starting from scratch. The first thing I like to do is to explain the basic steps in making a show:

1. Concept & Script

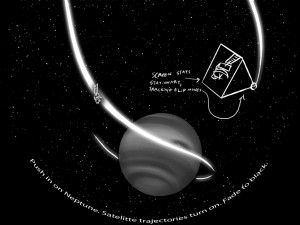

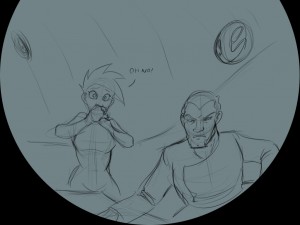

2. Storyboards & Concept Art

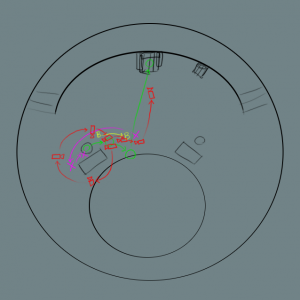

3. Animatics/Pre-Viz & Scratch Audio

4. Production (film and 3D) & Sound FX/V.O.

5. Music, Narration and Post

But another thing I like to show to newbies is this great video from Cirkus Productions out of New Zealand about the ABCs of the animation process.

Once we were fairly certain they had wrapped their heads around the basic process, we had to make sure that they understood the differences between STAGE, SCREEN and DOME productions. Luckily, in some ways, a dome production with live actors is actually more similar to a stage production than to a film. It’s just that the stage surrounds the audience and we can move from scene to scene much more quickly.

As you know, you don’t cut on the dome like you do in a film. Instead, you need to draw the viewer’s eye to what is important using other techniques. Paperhand had plenty of experience working in that manner. So once we had convinced them that it wouldn’t work to cut to a close-up of an object or character and got them back to using their old-school techniques, things went much more smoothly. Oddly enough, this was one situation where having less “film” knowledge worked to our advantage.

What they weren’t prepared for were the limitations on the color green when shooting on a green screen. It’s tough when you’ve been making puppets with green in them for 15 years and someone tells you that you can’t use that color. If all else had failed and they had ended up with green on their puppets, we could have painted the room blue.
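For anyone curious why green is such a problem, here is a minimal sketch of the idea behind keying. It is a toy, not what our compositing software actually does: the function name `key_mask`, the threshold and the sample colors are all made up for illustration. It just shows that a keyer looking for "mostly green" pixels can't tell a green wall from a green puppet, while a blue backdrop leaves the green puppet alone.

```python
# Toy chroma key, for illustration only. Real keyers are far more
# sophisticated; the names, threshold and colors here are assumptions.

def key_mask(pixels, key_channel=1, threshold=60):
    """Return True for each (R, G, B) pixel that would be made transparent.

    A pixel is keyed out when the key channel (1 = green, 2 = blue)
    exceeds the stronger of the other two channels by more than `threshold`.
    """
    mask = []
    for pixel in pixels:
        others = [v for i, v in enumerate(pixel) if i != key_channel]
        mask.append(pixel[key_channel] - max(others) > threshold)
    return mask


# Hypothetical sample colors
backdrop_green = (30, 200, 40)   # green screen wall
backdrop_blue = (30, 40, 200)    # the room repainted blue
puppet_red = (190, 40, 35)       # red part of a puppet
puppet_green = (50, 170, 60)     # green part of a puppet

# Shooting on green: the green part of the puppet vanishes with the wall.
green_key = key_mask([backdrop_green, puppet_red, puppet_green], key_channel=1)

# Shooting on blue: only the wall is removed.
blue_key = key_mask([backdrop_blue, puppet_red, puppet_green], key_channel=2)

print(green_key)
print(blue_key)
```

The trade-off is simply moved, of course: on a blue backdrop it would be the blue parts of a puppet that disappear.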

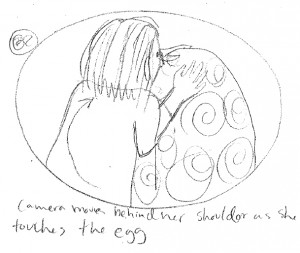

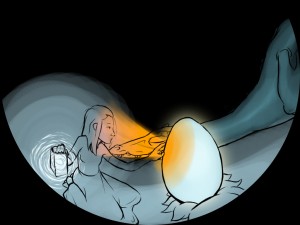

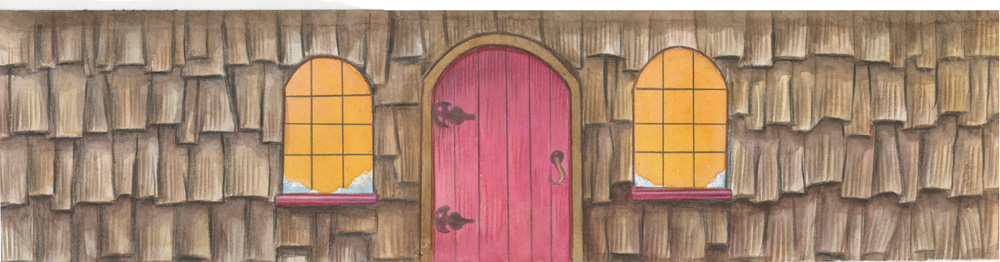

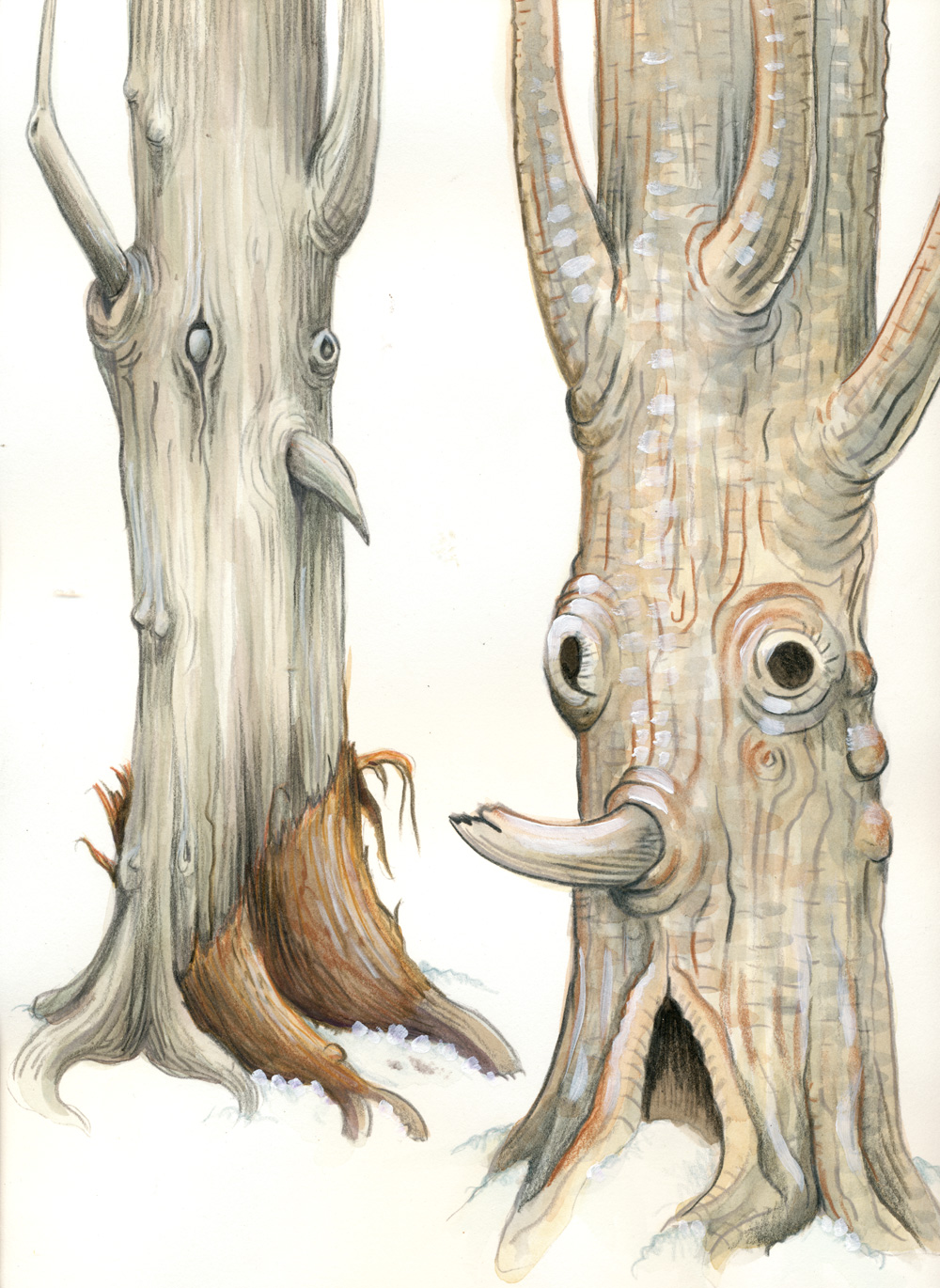

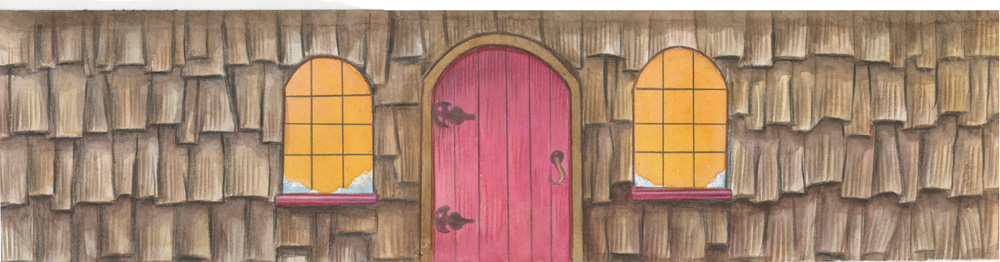

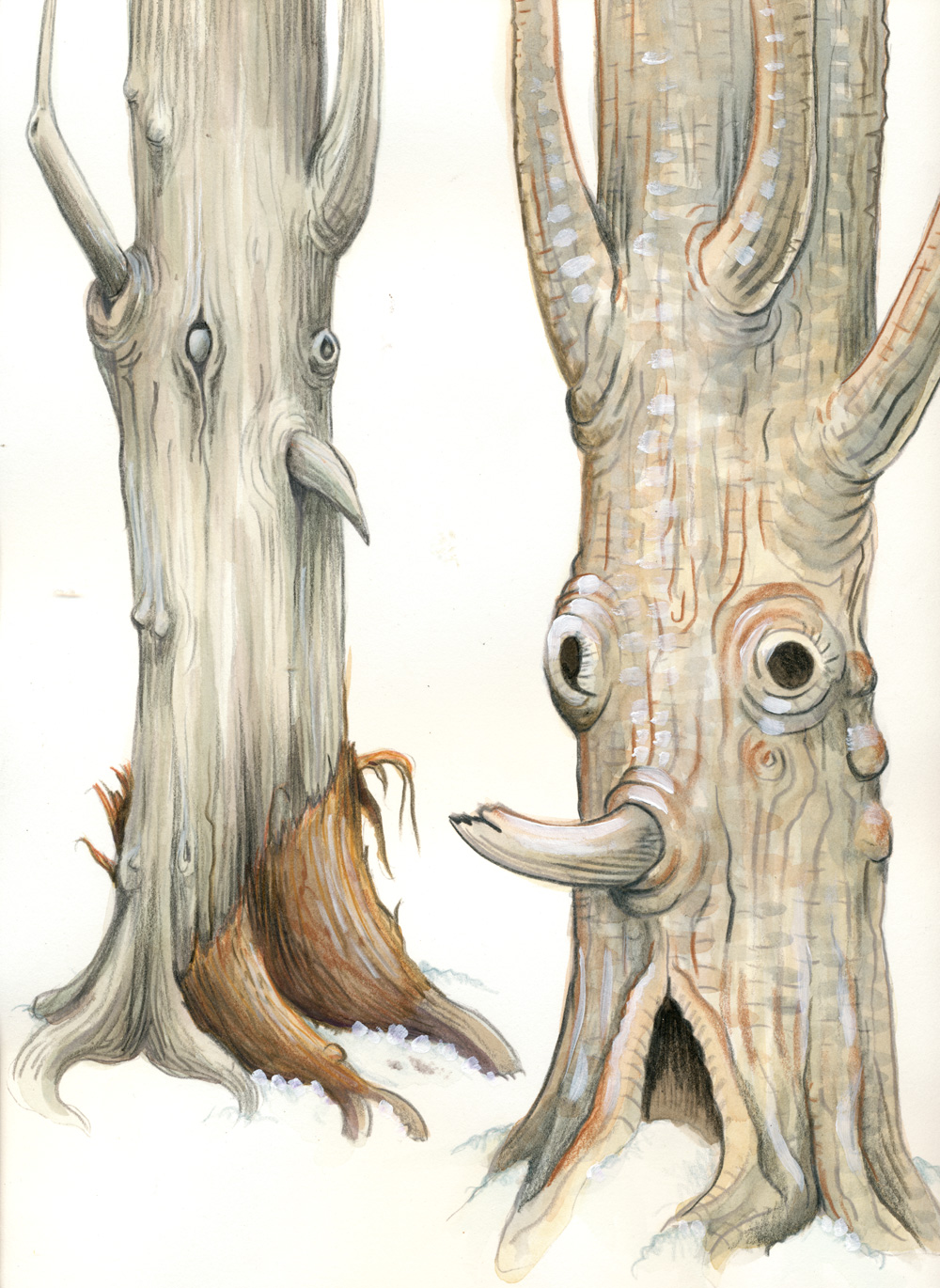

The next thing we had to explain to our new non-digital friends was the beauty of digital magic. At first, they assumed that when creating concept art or pieces for the production, they’d have to make them life-sized. They also thought that if we needed 200 trees, they’d have to paint 200 trees. They were happy to hear about copying and pasting in After Effects. They were also happy to realize that we could tweak colors very easily in the system without them having to paint new versions. The great thing is that we ended up with a lot of fantastic concept art. Here are some examples:

Some of us at Morehead are heading down to Baton Rouge this week for IPS and Domefest and we’ll be up on the big screen a lot. Join us in Sky-Skan’s Zendome (the biggest dome in the dome village) at 3:15 on Wednesday for a screening of Solar System Odyssey. Clips from two of our shows also made it into Sky-Skan’s Best of Fulldome reel during their main presentation. Lastly, we’re happy to announce that Jeepers Creepers is a Juried Finalist and on the Juried Show Reel for Domefest this year. See you there!
